“We have been using deep-learning segmentation in RayStation for several years in our clinic. Combined with the scripting interface, this feature not only accelerates our treatment planning process but also ensures a consistent standard of quality, enhancing overall efficiency.”

DEEP LEARNING SEGMENTATION

With the automatic deep learning segmentation module in RayStation®*, state-of-the-art deep learning segmentation methods are seamlessly integrated into the clinical workflow.

Many different methods have been proposed for medical image segmentation. In the scientific literature, the most popular methods today are based on deep neural networks. Such methods can capture complex relationships in data and have several practical properties that make them well suited to the task. For these reasons, the best-scoring segmentation methods in academic competitions are almost exclusively based on deep learning techniques.

One attractive feature of deep learning segmentation methods is that the computationally expensive work is moved offline to the training step, while the inference step remains fast. This means that the time needed to generate new segmentations is not affected by the number of examples used to train the model. Consequently, knowledge from a large dataset of examples can be encoded in the model while performance remains efficient.
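The split between an expensive training step and a cheap inference step can be illustrated with a deliberately tiny model. The sketch below is a hypothetical stand-in, not the RayStation network: a one-feature logistic-regression "voxel classifier" trained by plain gradient descent on synthetic intensities. The names `train`, `predict`, `make_samples` and `n_params` are illustrative assumptions. Training loops over every example, so its cost grows with the dataset, whereas the trained model is always the same two numbers and prediction never touches the training data.

```python
import math
from typing import List, Tuple

# Toy stand-in, NOT the RayStation model: a per-voxel logistic-regression
# classifier whose size is fixed (one weight per feature plus a bias).
Model = Tuple[List[float], float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, n_features: int, epochs: int = 100, lr: float = 1.0) -> Model:
    """Full-batch gradient descent on the logistic loss.

    samples: list of (features, label) pairs with label in {0, 1}.
    Every epoch loops over all samples, so training cost grows with len(samples).
    """
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * n_features, 0.0
        for x, y in samples:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        n = len(samples)
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b  # n_features + 1 numbers, regardless of how many samples were used


def predict(model: Model, x: List[float]) -> int:
    """Inference reads only the fixed parameters, never the training data."""
    w, b = model
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


def make_samples(n: int):
    # Synthetic one-feature "voxels": evenly spaced intensities in (-1, 1),
    # labeled 1 (inside the structure) when the intensity is positive.
    xs = [-1.0 + 2.0 * (i + 0.5) / n for i in range(n)]
    return [([x], 1 if x > 0 else 0) for x in xs]


def n_params(model: Model) -> int:
    w, _ = model
    return len(w) + 1


if __name__ == "__main__":
    small = train(make_samples(10), n_features=1)
    large = train(make_samples(1000), n_features=1)
    print(n_params(small), n_params(large))
    print(predict(large, [0.9]), predict(large, [-0.9]))
```

Training on 1,000 examples does about 100 times more work per epoch than training on 10, yet both runs yield a model of identical size, so a single prediction costs the same in either case.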

RAYSTATION INTEGRATION

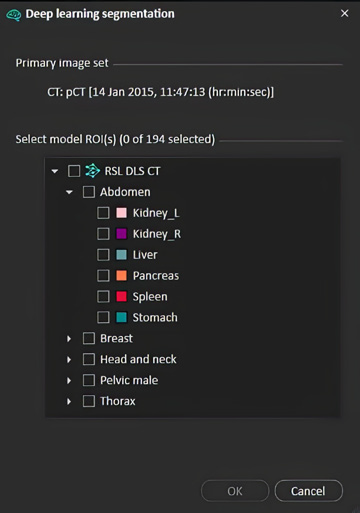

The deep learning segmentation functionality can be accessed in the patient modeling module. A wide range of anatomical structures is supported, covering various body sites and, for some structures, different delineation protocols.

Figure 1 shows an example of the drop-down menu where the available anatomical structures can be selected. Generating the anatomical structures is fast, usually under a minute, but the exact running time depends on hardware and the number of structures to be generated.

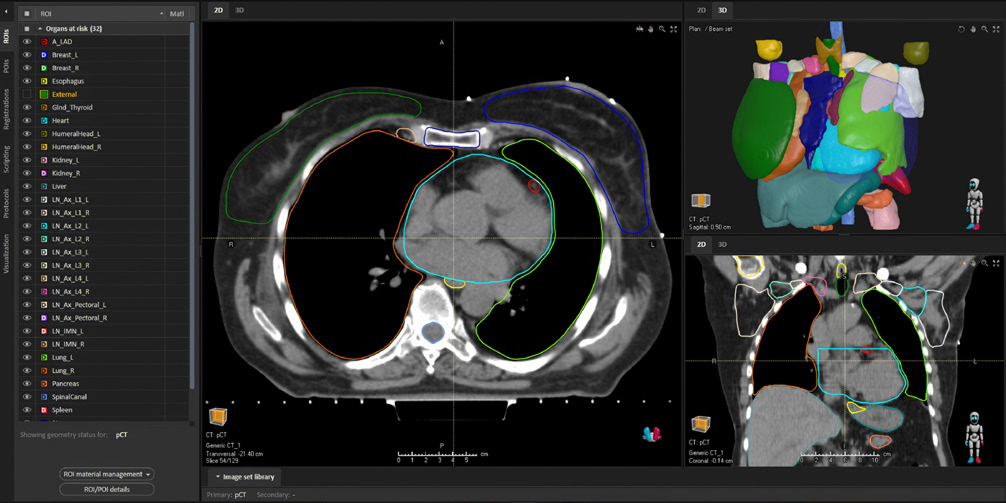

A high priority for RaySearch has been to integrate the deep learning segmentation functionality tightly with the planning workflow in RayStation®. For example, once an ROI has been generated, it can be edited with the tools available in the structure definition section of the patient modeling module, just like any other ROI in RayStation. Deep learning segmentation can also be accessed via scripting, which allows automated batch processing of patients during off-hours.
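Off-hours batch processing through scripting can be organized as a loop over a patient list that only runs inside a configured time window. The sketch below is generic Python and deliberately does not reproduce RayStation's scripting API: `segment` is a hypothetical placeholder for a site-specific script that opens a patient and runs deep learning segmentation, and the 20:00 to 06:00 window, the function names and the status strings are all assumptions.

```python
from datetime import datetime, time
from typing import Callable, Dict, Iterable, List, Optional


def in_off_hours(now: time, start: time = time(20, 0), end: time = time(6, 0)) -> bool:
    """Return True if `now` lies in [start, end); the window may wrap past midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def run_batch(patient_ids: Iterable[str], structures: List[str],
              segment: Callable[[str, List[str]], None],
              now: Optional[time] = None) -> Dict[str, str]:
    """Run `segment` for each patient if the current time is within off-hours.

    `segment` is a HYPOTHETICAL placeholder for a site-specific RayStation
    script; no RayStation scripting calls are made here.
    """
    if now is None:
        now = datetime.now().time()
    if not in_off_hours(now):
        return {pid: "skipped: outside off-hours window" for pid in patient_ids}
    status: Dict[str, str] = {}
    for pid in patient_ids:
        try:
            segment(pid, structures)
            status[pid] = "done"
        except Exception as exc:  # one failing patient should not stop the batch
            status[pid] = f"failed: {exc}"
    return status


if __name__ == "__main__":
    def fake_segment(patient_id: str, structures: List[str]) -> None:
        print(f"segmenting {', '.join(structures)} for {patient_id}")

    print(run_batch(["P001", "P002"], ["Bladder", "Rectum"], fake_segment, now=time(22, 30)))
```

Catching errors per patient means that a single problematic case, such as a missing image set, is recorded in the status report instead of aborting the rest of the overnight run.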

Deep learning segmentation is included in RayStation and comes with pre-trained models. The models are developed by RaySearch and based on clinical guidelines. Figure 2 shows an example of output generated by the module. With respect to privacy regulations, a trained model does not contain any image data from the training set, and it is executed locally, which means that the image being delineated stays on premises.

METHOD INFORMATION

The deep learning method is based on a fully convolutional neural network with a U-Net-like architecture [1,2]. The network operates in 3D and can be thought of as a non-linear function that takes a three-dimensional image as input and produces a labeled (segmented) image as output. The parameters of the network are selected by a training procedure based on gradient descent: during training, the network learns the most important features of the training data set by iteratively updating its parameters. Fully convolutional neural network models can combine image features at different levels of abstraction to generate highly detailed segmentations. Since the number of parameters is held fixed, the features can be learned without affecting the size or runtime of the model.

A roadmap document describes which models are planned for release. To get access to the roadmap, please contact a RaySearch representative. The team is also happy to receive requests on which functionality should be prioritized.
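The encoder-decoder structure of a U-Net-like 3D network can be made concrete by tracing feature-map shapes through it. The sketch below assumes a simplified variant in the spirit of [1,2], with padded 3x3x3 convolutions, 2x2x2 pooling and up-convolutions, channel counts that double at each level, and skip connections concatenated along the channel axis. The base width, number of levels, number of labels and the function name `unet3d_shapes` are illustrative choices, not the configuration of the RayStation models.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int, int]  # (channels, depth, height, width)


def unet3d_shapes(in_shape: Shape, base_channels: int = 16, levels: int = 3,
                  n_labels: int = 4) -> List[Tuple[str, Shape]]:
    """Trace feature-map shapes through a simplified 3D U-Net-like network."""
    _, d, h, w = in_shape
    if any(s % (2 ** levels) for s in (d, h, w)):
        raise ValueError("spatial size must be divisible by 2**levels")
    trace: List[Tuple[str, Shape]] = [("input", in_shape)]
    skips: List[Shape] = []
    ch = base_channels
    # Encoder: padded 3x3x3 convolutions keep the spatial size, then
    # 2x2x2 max pooling halves it while the channel count doubles.
    for lvl in range(levels):
        trace.append((f"enc{lvl}", (ch, d, h, w)))
        skips.append((ch, d, h, w))
        d, h, w = d // 2, h // 2, w // 2
        ch *= 2
    trace.append(("bottleneck", (ch, d, h, w)))
    # Decoder: a 2x2x2 up-convolution doubles the spatial size and halves the
    # channels; the matching encoder features are concatenated (skip
    # connection), combining coarse context with fine spatial detail.
    for lvl in reversed(range(levels)):
        skip_ch, d, h, w = skips[lvl]
        up_ch = ch // 2
        trace.append((f"concat{lvl}", (up_ch + skip_ch, d, h, w)))
        ch = up_ch  # convolutions after the concatenation reduce the channels again
        trace.append((f"dec{lvl}", (ch, d, h, w)))
    # A final 1x1x1 convolution gives per-voxel scores for each label;
    # taking the argmax over the label axis yields the segmented image.
    trace.append(("scores", (n_labels, d, h, w)))
    return trace


if __name__ == "__main__":
    for name, shape in unet3d_shapes((1, 64, 128, 128)):
        print(f"{name:>10}: {shape}")
```

Because every layer is convolutional, the same parameters apply to any input whose spatial size is divisible by 2^levels; only the feature-map shapes change, and the output always has the same spatial size as the input.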

REFERENCES

[1] Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. "U-Net: Convolutional networks for biomedical image segmentation." MICCAI 2015.

[2] Çiçek, Özgün, et al. "3D U-Net: Learning dense volumetric segmentation from sparse annotation." MICCAI 2016.

* Subject to regulatory clearance in some markets.

For more information or to see a demo, contact sales@raysearchlabs.com